Recently there has been a lot of concern, and press, around research indicating ethnic bias in Face Recognition. This resonates with me very personally as a minority founder in the face recognition space.

So deeply, in fact, that I wrote about it in an October 2016 article titled “Kairos’ Commitment to Your Privacy and Facial Recognition Regulations”, in which I acknowledged the impact of the problem and expressed Kairos’ position on the importance of rectification.

I felt then, and feel now, that it is our responsibility as a Face Recognition provider to respond to this research and begin working together as an industry to eliminate disparities. Because when people become distrustful of technology that can positively impact global culture, everyone involved has a duty to pay attention. And take action.

What’s happening?

Joy Buolamwini of the M.I.T. Media Lab has released research [1] on what she calls “the coded gaze”, or algorithmic bias. Her findings indicate gender and skin-type biases in commercial face analysis software. The extent of these biases is reflected in an error rate of 0.8 percent for light-skinned men, and as high as 34.7 percent for dark-skinned women. Specifically, Face Recognition algorithms made by Microsoft, IBM and Face++ (the three commercial systems Buolamwini tested) were more likely to misidentify the gender of black women than that of white men.
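To make that kind of disparity concrete, here is a minimal sketch of how error rates can be broken out per subgroup instead of being reported as one aggregate number. The toy records, the group names, and the function `error_rate_by_group` are illustrative assumptions; they are not data or code from the Gender Shades study.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Return {group: error rate} for (group, true_label, predicted_label) records."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, truth, predicted in records:
        totals[group] += 1
        if truth != predicted:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Hypothetical toy data, made up purely for illustration.
sample = [
    ("lighter_male", "male", "male"),
    ("lighter_male", "male", "male"),
    ("lighter_male", "male", "male"),
    ("lighter_male", "male", "male"),
    ("darker_female", "female", "male"),
    ("darker_female", "female", "female"),
    ("darker_female", "female", "female"),
    ("darker_female", "female", "male"),
]

rates = error_rate_by_group(sample)

# The aggregate error rate hides the gap between the two groups.
overall = sum(truth != predicted for _, truth, predicted in sample) / len(sample)

print(rates)
print(overall)
```

In this toy case the aggregate error rate sits between the two subgroup rates, which is why a single headline accuracy figure can mask a large gap for one group.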

When such biases exist, the implications can be far-reaching. In my 2016 article I cited a finding from the Center on Privacy & Technology at Georgetown University’s law school that over 117 million American adults are affected by our government's use of face recognition, with African Americans disproportionately affected.

For law enforcement systems relying on Face Recognition to identify suspects using mug shot databases, accuracy, particularly in the case of dark-skinned people, can mean the difference between disproportionate arrest rates and civil equality.

Pilot Parliaments Benchmark (PPB) consists of 1,270 individuals from three African countries (Rwanda, Senegal, and South Africa) and three European countries (Iceland, Finland, and Sweden), selected for gender parity in the national parliaments. Credit: Joy Buolamwini

Sakira Cook, counsel at the Leadership Conference on Civil and Human Rights, points out that “The problem is not the technologies themselves but the underlying bias in how and where they are deployed”. Certainly, the biases that exist in law enforcement predate Face Recognition technology. Yet when the systems themselves are unintentionally biased due to improperly trained algorithms, the combination can be quite damaging.

And as industries like Marketing, Banking, and Healthcare integrate Face Recognition into their decision-making processes, drawing on demographic insights around consumer preference, lending practices, and patient satisfaction, it is crucial that the systems delivering the metrics behind these insights be as precise as possible.

Solving this problem will have a ripple effect on the technology

The immediate importance of rectifying this problem is obvious. Yet there is a secondary, and remarkably important, ramification that hinges on the resolution of these biases.

Public sentiment around Face Recognition

Hate it or love it, AI and machine learning driven Face Recognition and Human Analytics are becoming the standard for establishing metrics used to generate insights across industries around the world. An honest dialogue about the troubling failure of algorithms to properly identify women, people of color, or anyone else is exactly the catalyst needed to effect change.

This is so critical because the positive, culture-changing effect that Face Recognition will have globally is profound beyond practical application. As mainstream adoption of the technology for business and personal use, as with the Apple iPhone X, is being fast-tracked, the subsequent social response, such as the discussion of bias we are now having, is creating a global framework for 21st century ethnic classification expectations and standards. At Kairos, we were made very aware of this evolution after the release of our Diversity & Ethnicity app.

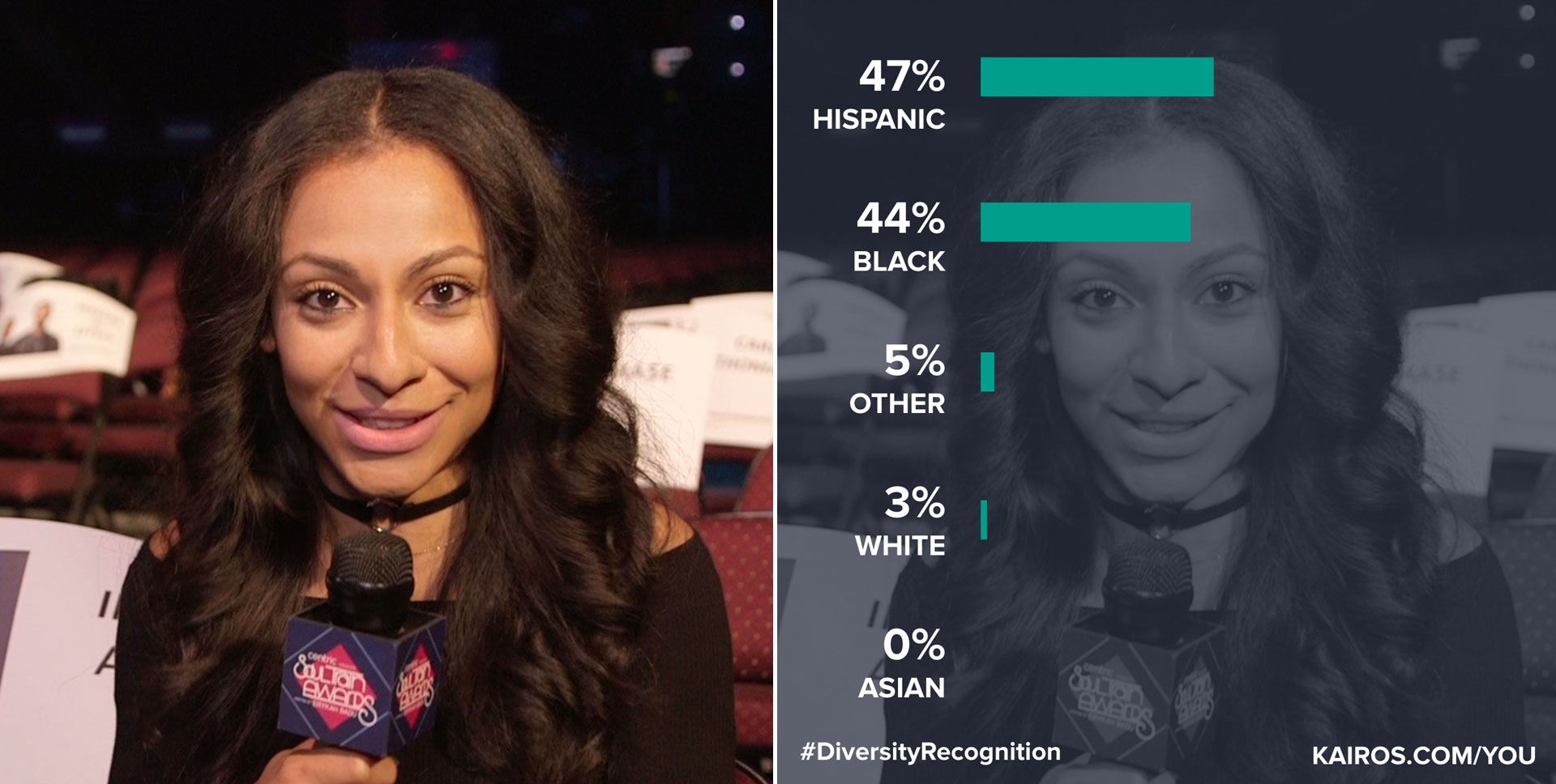

"User response has ranged from amazement and praise, to displeasure and offense. And we totally understand why. While most users will get a spot-on result, we acknowledge that the ethnicity classifiers currently offered (Black, White, Asian, Hispanic, ‘Other’) fall short of representing the richly diverse and rapidly evolving tapestry of culture and race." - Diversity Gone Viral, 2017

Our Ethnicity & Diversity app was used by millions of curious people from all parts of the world. We highlighted some of what we learned from them in “Diversity Gone Viral”. What users shared with us ranged from praise and extreme critique of our app to how they identify and prefer to be addressed in terms of ethnic classification. It was amazing.

Example result from the Kairos Diversity Recognition app

At Kairos, we LIVE for this kind of feedback and use it to improve our product, thereby improving public sentiment around a technology that is still in the process of earning trust. I would also like to be clear that we don't find it any less concerning when Face Recognition fails to identify a man of mixed Asian and European descent, or of ANY ethnicity, than when it fails to identify a woman of African descent. In any case of misidentification, the person expecting an accurate result may be left feeling offended and, perhaps more troubling, misclassified in a database.

What can be done?

Fortunately, the matter of algorithmic ethnic bias, or “the coded gaze” as Buolamwini calls it, can be corrected with a lot of cooperative effort and patience while the AI learns. If you think of machine learning in terms of teaching a child, then consider that you cannot reasonably expect a child to recognize something or someone it has never or seldom seen. Similarly, in the case of algorithmic ethnic bias, the system can only be as diverse in its recognition of ethnicities as the catalogue of photos on which it has been trained.

That said, offering the algorithms a much more diverse, expansive selection of images depicting dark-skinned women, various other shades of color, and individuals identifying as “mixed” (which includes MANY ethnicities) will help close the gap found in the M.I.T. Media Lab research.

“...every tool ever invented is a mixed blessing. How things will balance out is a matter of vigilance, moral courage, and the distribution of power.”

- 'The Cult of Information', by Theodore Roszak, Author and Professor.

Trust the process

While we are having the conversation about the shortcomings and necessary improvements in Face Recognition, I feel it's important to remember that this technology is relatively new and still evolving and improving as it expands into areas like ethnicity identification. Not to mention, there is a margin for error in any system. Including the human eye!

Improve the data

Why not implement a “bias standard” by requiring a proactive approach to gathering, training, and testing data from a population that is truly representative of global diversity? In combination with examples of natural poses, varied lighting, angles, and so on, this “standard” will ensure systems operate with more precision and reliability around ethnicity.
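As a rough illustration of what one check under such a “bias standard” might look like, the sketch below compares the share of each group in a data set against a target share and flags the groups that fall short. The group names, the target shares, the 5-point tolerance, and the function names are all assumptions made up for this example; no published standard defines them.

```python
from collections import Counter


def representation_gaps(labels, target_shares):
    """Return {group: actual share - target share} for every target group."""
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    total = len(labels)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in target_shares.items()
    }


def underrepresented(labels, target_shares, tolerance=0.05):
    """List groups whose share falls below target by more than the tolerance."""
    gaps = representation_gaps(labels, target_shares)
    return sorted(group for group, gap in gaps.items() if gap < -tolerance)


# Hypothetical data set composition and targets, for illustration only.
labels = ["group_a"] * 70 + ["group_b"] * 20 + ["group_c"] * 10
targets = {"group_a": 0.4, "group_b": 0.3, "group_c": 0.3}

gaps = representation_gaps(labels, targets)
flagged = underrepresented(labels, targets)

print(gaps)
print(flagged)
```

A check like this only covers who is in the data. It says nothing about pose, lighting, or image quality within each group, so it would be one test among several rather than a complete standard.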

Seek constant feedback

The industry has got to get out of the lab and stop building things in isolation. Being inclusive and engaging wider communities will enable us to see how our AI is being perceived in the real world. It’s going to take more effort (and cost) to approach these solutions the right way, and I’m not just talking about dodging bad PR. Bias breaks the functionality of the application, and what we are trying to achieve from a business POV. A global, connected economy DEMANDS we fulfil the promise of the technology we build to be respectful, trustworthy and inclusive.

MEET US AT SXSW

This March, Kairos returns to South by Southwest, the annual film, tech, and music festivals and conferences in Austin, Texas, USA. Brian will be on the panel for 'Face Recognition: Please Search Responsibly'.

Looking forward…

As an educated culture we have a social responsibility to think rationally and reasonably about technology. Clickbait titles like “Facial Recognition Is Accurate, if You’re a White Guy” [2], as circulated by credible publications like The New York Times, are counterproductive, sensational, and dangerous. The implication that there is a “hidden bias” in how today’s data sets are gathered further fuels the mistrust that people have for the technology.

Let’s simply acknowledge that current data sets lack representation of segments of the population, and move ahead, together, in getting these algorithms properly trained to be as inclusive as possible of all the ethnicities represented on our planet.

We are deeply appreciative of the M.I.T. study and how it has reinvigorated Kairos’ commitment to being a responsible participant in this discussion, as we determinedly strive to be a leader of change and betterment in our industry. We invite Joy Buolamwini and her team to join us in establishing “bias standards” so that the future of Face Recognition includes all faces.

As always, we are here and open to answering any questions about our technology and practices.

Brian Brackeen

Brian is the CEO at Kairos, a Human Analytics platform that radically changes how companies understand people.

[1] Gender Shades

[2] Facial Recognition Is Accurate, if You’re a White Guy